Jonathan Ntege Lubwama

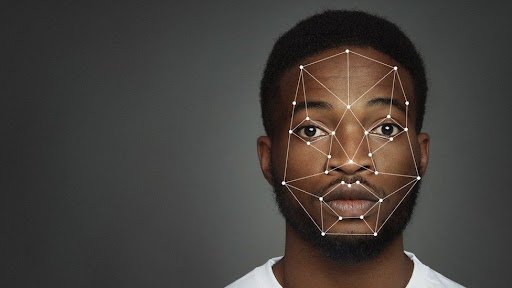

- Six Black people have been wrongfully arrested after police relied on AI-powered facial recognition technology

- The AI market is expected to reach $2.57 trillion by 2032, but big tech has been warning of its dangers

In a disconcerting revelation, every person known to have been wrongfully arrested because of artificial intelligence (AI) facial recognition technology has been Black. Police are increasingly using AI, but the technology is flawed and heavily biased against people of color, according to a study by criminal justice experts. All of this is happening while the AI market is expected to hit around $2.57 trillion by 2032, and big tech continues to warn of its dangers.

Why This Matters: The growing reliance on AI in law enforcement is concerning, especially considering the rapid expansion of the AI market, which was valued at $454.12 billion in 2022. As the AI market continues to grow, tech giants have been vocal about the potential pitfalls of AI, particularly in relation to racial bias.

This unsettling pattern underscores the warnings issued by civil liberties groups, tech experts, and activists over the years, cautioning against the potential for facial recognition technology to amplify racial disparities in law enforcement. A senior attorney from the American Civil Liberties Union (ACLU) has labeled the use of facial recognition in policing as an “extremely dangerous practice.” This assertion is backed by six known instances of wrongful arrests, all involving Black individuals, attributed to the misuse of this technology.

A research paper by criminal justice experts Thaddeus L. Johnson and Natasha N. Johnson, published earlier this year, reveals that facial recognition technology leads to the arrest of Black individuals at disproportionately high rates. The researchers attribute this to a combination of factors, including the underrepresentation of Black faces in the algorithms’ training data sets, an overreliance on the presumed infallibility of these programs, and the way these issues amplify officers’ own biases. The study also highlights that Black individuals are overrepresented in mugshot databases, which further biases AI data sets. As a result, AI is more likely to incorrectly match Black faces to criminal suspects, leading to the wrongful targeting and arrest of innocent individuals.

What’s Next: The current situation underscores the urgent need for a comprehensive review of the use of AI in law enforcement. It is crucial to address these racial disparities and ensure that the technology is used responsibly and ethically. The future of AI in law enforcement hangs in the balance, and the next steps taken will significantly impact its trajectory.

CBX Vibe: “Robot Rock” Daft Punk